Utility-based nonlinear mapping mechanism for improving linear layers in neural networks

DOI:

https://doi.org/10.36790/epistemus.v19i38.442

Keywords:

Utility-Based Transformation, Nonlinear Weight Mapping, Neural Network Enhancement, Plug-and-Play Mechanism, General Replacement of Linear Layers

Abstract

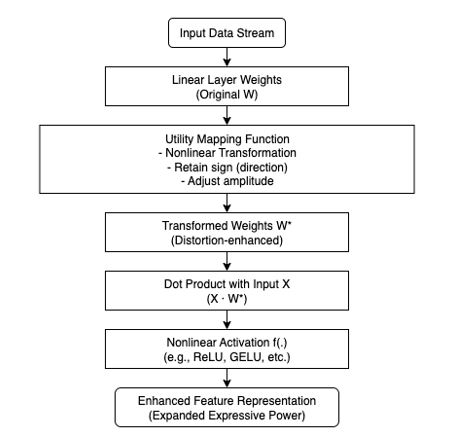

This article proposes a new universal linear transformation mechanism inspired by von Neumann-Morgenstern utility theory, referred to as the Von Neumann-Morgenstern (VNM) Mechanism. The mechanism structurally rebuilds the linear layer of a neural network by applying a "utility transformation" to the traditional linear weights. Experiments on the CIFAR-10 image classification task show that a model using the VNM mechanism significantly outperforms traditional methods on multiple evaluation metrics, with greater stability and generalization ability. The article highlights the mechanism's broad portability and its suitability for linear transformation modules in a variety of neural network architectures, offering a new idea for designing more effective deep learning models.
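As a rough illustration of what applying a utility transformation to linear weights could look like, the sketch below computes y = u(W)x + b, where u is applied to each weight before the usual weighted sum. The abstract does not give the form of the utility function, so the names `log_utility`, `utility_linear`, and `plain_linear` are hypothetical, and the signed logarithmic utility is an assumption chosen only because it is a familiar concave utility. It should not be read as the authors' VNM formulation.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def log_utility(w: float) -> float:
    """Hypothetical utility u(w) = sign(w) * log(1 + |w|).

    Assumption for illustration only; the article's actual utility
    function is not stated in the abstract.
    """
    return math.copysign(math.log1p(abs(w)), w)


def utility_linear(
    x: List[float],
    weights: Matrix,
    bias: List[float],
    utility: Callable[[float], float] = log_utility,
) -> List[float]:
    """Compute y = u(W) x + b, applying `utility` to every weight."""
    if len(weights) != len(bias):
        raise ValueError("weights and bias must have the same number of rows")
    outputs = []
    for row, b in zip(weights, bias):
        if len(row) != len(x):
            raise ValueError("each weight row must match the input length")
        # Weighted sum over the utility-transformed weights, plus the bias.
        outputs.append(sum(utility(w) * xi for w, xi in zip(row, x)) + b)
    return outputs


def plain_linear(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Ordinary linear layer y = W x + b, i.e. the identity utility."""
    return utility_linear(x, weights, bias, utility=lambda w: w)
```

With the identity passed as `utility`, the function reduces to an ordinary linear layer, which is one way to read the "plug-and-play" description: under this assumption, only the weight mapping changes, while the input and output shapes stay the same.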




License

Copyright 2025 EPISTEMUS

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

The journal acquires the economic rights to the articles solely for non-profit dissemination, without prejudice to the authors' own rights.

The authors are the legitimate holders of the intellectual property rights to their respective articles and, in that capacity, by submitting their texts they express their wish to collaborate with the journal Epistemus, published twice a year by the Universidad de Sonora.

Accordingly, freely, voluntarily, and without charge, once the article is accepted for publication, they assign their rights to the Universidad de Sonora so that it may edit, publish, distribute, and make the work available through intranets, the internet, or CD, without any limitation of form or time, provided that this is done on a non-profit basis and with the express obligation to respect and credit the authors in any use made of the work.

It is understood that this authorization is not an assignment or transfer of any of their economic rights in favor of that institution. UniSon guarantees you the right to reproduce the contribution by any medium in which you are the author, provided that due credit is given to the original publication of the contribution in Epistemus.

Unless otherwise indicated, all content of the electronic edition is distributed under an Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) license. The informative summary and the legal text of the license are available for consultation. This must be expressly stated in this form when necessary.